Facebook employees warned company was failing to police hate speech

Facebook employees warned for years that it was failing to police abusive content and hate speech: Tech firm does not have enough staff and AI used isn’t up to the task, internal docs reveal

- Hate speech has proliferated on Facebook in volatile, at-risk countries

- The company failed to hire foreign language staff to filter out dangerous posts

- There were fears it would lead to real-world violence, internal documents show

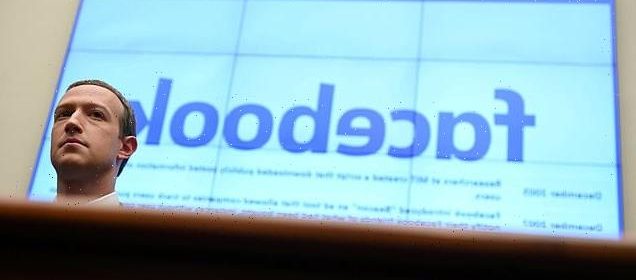

Facebook employees have warned for years that the company was failing to police abusive content in countries where it was likely to cause the most harm, interviews and internal documents reveal.

The tech giant has known it has not hired enough workers who possess the language skills or local knowledge to identify objectionable posts from users in developing countries, documents seen by Reuters show.

The artificial intelligence systems employed to root out the content are also not up to the task.

The failures to block hate speech in volatile regions such as Myanmar, the Middle East, Ethiopia and Vietnam could contribute to real-world violence, it has been claimed.

Facebook currently operates in more than 190 countries and boasts more than 2.8 billion monthly users who post content in more than 160 languages.

But in a review posted to Facebook’s internal message board last year regarding ways the company identifies abuses, one employee reported ‘significant gaps’ in certain at-risk countries.

The documents are among a cache of disclosures made to the US Securities and Exchange Commission and Congress by Facebook whistleblower Frances Haugen, a former Facebook product manager who left the company in May.

Reuters was among a group of news organizations able to view the documents, which include presentations, reports and posts shared on the company’s internal message board. Their existence was first reported by The Wall Street Journal.

Facebook spokesperson Mavis Jones said in a statement that the company has native speakers worldwide reviewing content in more than 70 languages, as well as experts in humanitarian and human rights issues.

She said these teams are working to stop abuse on Facebook’s platform in places where there is a heightened risk of conflict and violence.

‘We know these challenges are real and we are proud of the work we’ve done to date,’ Jones said.

Still, the cache of internal Facebook documents offers detailed snapshots of how employees in recent years have sounded alarms about problems with the company’s tools – both human and technological – aimed at rooting out or blocking speech that violated its own standards.

The material expands upon Reuters’ previous reporting on Myanmar and other countries where the world’s largest social network has failed repeatedly to protect users from problems on its own platform and has struggled to monitor content across languages.

Among the weaknesses cited were a lack of screening algorithms for languages used in some of the countries Facebook has deemed most ‘at-risk’ for potential real-world harm and violence stemming from abuses on its site.

Apple threatened to pull Facebook and Instagram from its app store over fears they were being used to traffic Filipina maids in the Middle East

Two years ago, Apple threatened to pull Facebook and Instagram from its app store over concerns about the platform being used as a tool to trade and sell maids in the Mideast.

After publicly promising to crack down, Facebook acknowledged in internal documents obtained by The Associated Press that it was ‘under-enforcing on confirmed abusive activity’ that saw Filipina maids complaining on the social media site of being abused.

Apple relented and Facebook and Instagram remained in the app store.

But Facebook’s crackdown seems to have had a limited effect.

Even today, a quick search for ‘khadima,’ or ‘maids’ in Arabic, will bring up accounts featuring posed photographs of Africans and South Asians with ages and prices listed next to their images.

That’s even as the Philippines government has a team of workers who do nothing but scour Facebook posts each day to try to protect desperate job seekers from criminal gangs and unscrupulous recruiters using the site.

While the Mideast remains a crucial source of work for women in Asia and Africa hoping to provide for their families back home, Facebook acknowledged some countries across the region have ‘especially egregious’ human rights issues when it comes to laborers’ protection.

‘In our investigation, domestic workers frequently complained to their recruitment agencies of being locked in their homes, starved, forced to extend their contracts indefinitely, unpaid, and repeatedly sold to other employers without their consent,’ one Facebook document read. ‘In response, agencies commonly told them to be more agreeable.’

The report added: ‘We also found recruitment agencies dismissing more serious crimes, such as physical or sexual assault, rather than helping domestic workers.’

In a statement to the AP, Facebook said it took the problem seriously, despite the continued spread of ads exploiting foreign workers in the Mideast.

‘We prohibit human exploitation in no uncertain terms,’ Facebook said. ‘We’ve been combating human trafficking on our platform for many years and our goal remains to prevent anyone who seeks to exploit others from having a home on our platform.’

The company designates countries ‘at-risk’ based on variables including unrest, ethnic violence, the number of users and existing laws, two former staffers told Reuters.

The system aims to steer resources to places where abuses on its site could have the most severe impact, the people said.

Facebook reviews and prioritizes these countries every six months in line with United Nations guidelines aimed at helping companies prevent and remedy human rights abuses in their business operations, spokesperson Jones said.

In 2018, United Nations experts investigating a brutal campaign of killings and expulsions against Myanmar’s Rohingya Muslim minority said Facebook was widely used to spread hate speech toward them.

That prompted the company to increase its staffing in vulnerable countries, a former employee told Reuters.

Facebook has said it should have done more to prevent the platform being used to incite offline violence in the country.

Ashraf Zeitoon, Facebook’s former head of policy for the Middle East and North Africa, who left in 2017, said the company’s approach to global growth has been ‘colonial,’ focused on monetization without safety measures.

More than 90 per cent of Facebook’s monthly active users are outside the United States or Canada.

Facebook has long touted the importance of its artificial-intelligence (AI) systems, in combination with human review, as a way of tackling objectionable and dangerous content on its platforms.

Machine-learning systems can detect such content with varying levels of accuracy.

But languages spoken outside the United States, Canada and Europe have been a stumbling block for Facebook’s automated content moderation, the documents provided to the government by Haugen show.

The company lacks AI systems to detect abusive posts in a number of languages used on its platform.

In 2020, for example, the company did not have screening algorithms known as ‘classifiers’ to find misinformation in Burmese, the language of Myanmar, or hate speech in the Ethiopian languages of Oromo or Amharic, a document showed.

These gaps can allow abusive posts to proliferate in the countries where Facebook itself has determined the risk of real-world harm is high.

Reuters this month found posts in Amharic, one of Ethiopia’s most common languages, referring to different ethnic groups as the enemy and issuing them death threats.

A nearly year-long conflict in the country between the Ethiopian government and rebel forces in the Tigray region has killed thousands of people and displaced more than 2 million.

Facebook spokesperson Jones said the company now has proactive detection technology to detect hate speech in Oromo and Amharic and has hired more people with ‘language, country and topic expertise,’ including people who have worked in Myanmar and Ethiopia.

In an undated document, which a person familiar with the disclosures said was from 2021, Facebook employees also shared examples of ‘fear-mongering, anti-Muslim narratives’ spread on the site in India, including calls to oust the large minority Muslim population there.

Zuckerberg ‘personally decided company would agree to demands by Vietnamese government to increase censorship of “anti-state” posts’

Mark Zuckerberg personally agreed to requests from Vietnam’s ruling Communist Party to censor anti-government dissidents, insiders say.

Facebook was threatened with being kicked out of the country, where it earns $1 billion in revenue annually, if it did not agree.

Zuckerberg, seen as a champion of free speech in the West for steadfastly refusing to remove dangerous content, agreed to Hanoi’s demands.

Ahead of the Communist party congress in January, the Vietnamese government was given effective control of the social media platform as activists were silenced online, sources claim.

‘Anti-state’ posts were removed as Facebook allowed the regime’s crackdown on dissidents to proceed.

Facebook told the Washington Post the decision was justified ‘to ensure our services remain available for millions of people who rely on them every day’.

Meanwhile in Myanmar, where Facebook-based misinformation has been linked repeatedly to ethnic and religious violence, the company acknowledged it had failed to stop the spread of hate speech targeting the minority Rohingya Muslim population.

The Rohingya’s persecution, which the U.S. has described as ethnic cleansing, led Facebook to publicly pledge in 2018 that it would recruit 100 native Myanmar language speakers to police its platforms.

But the company never disclosed how many content moderators it ultimately hired or revealed which of the nation’s many dialects they covered.

Despite Facebook’s public promises and many internal reports on the problems, the rights group Global Witness said the company’s recommendation algorithm continued to amplify army propaganda and other content that breaches the company’s Myanmar policies following a military coup in February.

‘Our lack of Hindi and Bengali classifiers means much of this content is never flagged or actioned,’ said the internal document on anti-Muslim narratives in India.

Internal posts and comments by employees this year also noted the lack of classifiers in the Urdu and Pashto languages to screen problematic content posted by users in Pakistan, Iran and Afghanistan.

Jones said Facebook added hate speech classifiers for Hindi in 2018 and Bengali in 2020, and classifiers for violence and incitement in Hindi and Bengali this year. She said Facebook also now has hate speech classifiers in Urdu but not Pashto.

Facebook’s human review of posts, which is crucial for nuanced problems like hate speech, also has gaps across key languages, the documents show.

An undated document laid out how its content moderation operation struggled with Arabic-language dialects of multiple ‘at-risk’ countries, leaving it constantly ‘playing catch up.’

The document acknowledged that, even within its Arabic-speaking reviewers, ‘Yemeni, Libyan, Saudi Arabian (really all Gulf nations) are either missing or have very low representation.’

Facebook’s Jones acknowledged that Arabic language content moderation ‘presents an enormous set of challenges.’ She said Facebook has made investments in staff over the last two years but recognizes ‘we still have more work to do.’

Three former Facebook employees who worked for the company’s Asia Pacific and Middle East and North Africa offices in the past five years told Reuters they believed content moderation in their regions had not been a priority for Facebook management.

These people said leadership did not understand the issues and did not devote enough staff and resources.

Facebook’s Jones said the California company cracks down on abuse by users outside the United States with the same intensity applied domestically.

The company said it uses AI proactively to identify hate speech in more than 50 languages.

Facebook said it bases its decisions on where to deploy AI on the size of the market and an assessment of the country’s risks. It declined to say in how many countries it did not have functioning hate speech classifiers.

Facebook also says it has 15,000 content moderators reviewing material from its global users. ‘Adding more language expertise has been a key focus for us,’ Jones said.

In the past two years, it has hired people who can review content in Amharic, Oromo, Tigrinya, Somali, and Burmese, the company said, and this year added moderators in 12 new languages, including Haitian Creole.

Facebook declined to say whether it requires a minimum number of content moderators for any language offered on the platform.

Facebook’s users are a powerful resource to identify content that violates the company’s standards.

The company has built a system for them to do so, but has acknowledged that the process can be time-consuming and expensive for users in countries without reliable internet access.

The reporting tool also has had bugs, design flaws and accessibility issues for some languages, according to the documents and digital rights activists who spoke with Reuters.

Next Billion Network, an alliance of tech-focused civil society groups working mostly across Asia, the Middle East and Africa, said in recent years it had repeatedly flagged problems with the reporting system to Facebook management.

Those included a technical defect that kept Facebook’s content review system from being able to see objectionable text accompanying videos and photos in some posts reported by users.

That issue prevented serious violations, such as death threats in the text of these posts, from being properly assessed, the group and a former Facebook employee told Reuters. They said the issue was fixed in 2020.

Facebook said it continues to work to improve its reporting systems and takes feedback seriously.

Language coverage remains a problem. A Facebook presentation from January, included in the documents, concluded ‘there is a huge gap in the Hate Speech reporting process in local languages’ for users in Afghanistan.

The recent pullout of U.S. troops there after two decades has ignited an internal power struggle in the country. So-called ‘community standards’ – the rules that govern what users can post – are also not available in Afghanistan’s main languages of Pashto and Dari, the author of the presentation said.

A Reuters review this month found that community standards weren’t available in about half the more than 110 languages that Facebook supports with features such as menus and prompts.

Facebook said it aims to have these rules available in 59 languages by the end of the year, and in another 20 languages by the end of 2022.
